Introduction

Enterprises face three broad challenges when adopting AI. These are especially relevant to organizations that have not traditionally been in the business of software engineering and AI:

- Identifying the right opportunities (Part I)

- Product development

- Avoiding common pitfalls

Part I of this three-part series presented an approach to identifying the right opportunities: the Double Diamond. Here we will focus on the second challenge, product development, or how to create an environment where successful AI products can be built with confidence.

AI Product Development

Once the right problem has been identified, it's time to build the solution. That is easier said than done for organizations that have not been set up to engineer software products, let alone AI products. Even companies that are software engineering companies at heart are not immune to failure and have long lists of abandoned products. See the Google Graveyard, for example.

But AI product development does not have to be a source of frustration if a few principles are taken into account. These principles are well understood in the software engineering industry. I'll highlight some of them below, along with how they apply to AI development specifically.

1. People

It's alarming that even today some organizations try to "save" money by not adequately staffing what is essentially a software engineering project. Every little dev shop has by now figured out that building quality products requires a project manager, a Scrum master, designers, developers and QA engineers. AI products require additional roles, such as data engineers, data scientists and machine learning engineers.

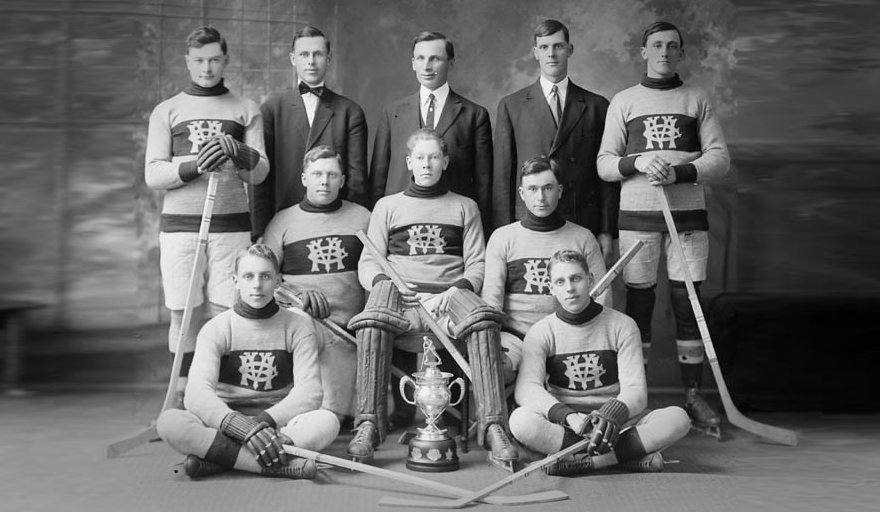

A complete AI product team. Business stakeholders in ties. (Public Domain)

The assumption that a team of data scientists can deliver robust software products on its own remains a common reason for failed or massively delayed projects. It's paramount to hire the roles required to build products, not just models.

High-performance teams can do amazing work during the experimentation and proof-of-concept phase, but even they will reach their limit if you ask them to scale out a solution without the dedicated resources necessary to build and maintain robust cloud architecture.

Some organizations find it challenging to attract this kind of talent. In those cases, start by investing in "seed" talent, i.e., people who bring a network, perhaps from the start-up world. Aim for highly skilled people to make up at least 20-30% of the team in the early days of your organization's AI transformation. These people can spearhead the changes necessary to adopt modern engineering practices and lead by example. Don't forget to develop a talent strategy with your talent/HR team that allows you to attract and retain the right kind of talent.

All of this doesn’t happen overnight. It’s not unusual for organizations to make multiple attempts to build the right talent pool. Sometimes they oscillate between “all-in” (hire the A-Team) and “back-to-basics” (let IT do it) before they converge on a better understanding of their AI talent needs.

Suffice it to say, the talent an organization is able to attract and retain defines what it can achieve. An incomplete product team will build incomplete products.

2. Process

AI products are built in the context of an organization. Any development effort depends on the processes that govern collaboration across teams and access to the right tools and infrastructure.

Agile and Scrum are widely known and will not be discussed here. However, two processes are essential to AI product development and somewhat independent of agile: enablement via the right tools, and discovery vs product engineering.

Enablement via the right tools

Data scientists and machine learning engineers need the right tools to succeed. Nobody would equip a professional cycling team with city bikes on the grounds that city bikes are enough to cross the finish line.

It's a sad fact of this industry that not all data science teams have access to adequate version control systems, developer-friendly computers, open source tools, live data or cloud infrastructure. These are basic preconditions for success, and an organization undermines itself if it handicaps its talent this way. It is also disrespectful not to give people the right tools to succeed at their jobs. Top talent will leave, and the organization's reputation as an attractive employer for AI talent will suffer.

Jan and Huub are trying out their new MacBooks. (Nationaal Archief)

Beyond basic enablement, data science teams should use the tools they can master and have a plan to learn the tools that are required. In practice, that means staying away (at first) from frameworks that are overkill or beyond the team's ability, such as Kubernetes. On the flip side, teams that work exclusively in Jupyter notebooks need a plan to adopt the tools for building production-ready code, e.g., packaging and GitLab CI/CD.

Having a technology adoption strategy in a fast-moving ecosystem like AI is key. This includes giving the team time to learn the required tools and to evaluate promising new ones. The hiring process should be synchronized with the technology strategy so that new hires have practical experience where it is needed and can possibly teach the team new tools. However, technology is there to enable teams and shouldn't become a distraction or, worse, an end in itself.

Discovery vs Product Engineering

Building a solution that derives insights from data requires two fundamental types of activities: discovery and product engineering¹. The distinction is necessary because the data often affects the solution design. Put differently, serious engineering cannot begin before the data (and therefore the opportunity) is sufficiently understood.

Less AI-mature enterprises often mix up these phases, and advances in discovery are sometimes mistaken for product development progress. Business stakeholders can get frustrated when they are presented with discovery findings but then have to wait a long time to see the same results in a fully scaled-out application. Similarly, data science teams can get caught in an endless discovery cycle where new findings beget further rounds of discovery.

It's thus important to contain the risk of open-ended discovery by properly scoping and time-boxing the activity, with the goal of flowing the findings into the final product. At the same time, engineering work should not be rushed before there is evidence in the data that it is worthwhile.

For example, building a forecasting engine requires testing the hypothesis that a prediction of sufficient accuracy can be made. The forecasting accuracy can be evaluated during the discovery phase without investing in a scaled-out forecasting engine. The next step is then to eliminate the technical feasibility risk, i.e., to establish whether the forecast can be run across all data at the desired frequency. This phase should focus on the implementation and not be derailed by further feature enhancements to the model. Those enhancements can then be addressed in the next round of discovery and product engineering.
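To make the discovery step more concrete, here is a minimal Python sketch of how forecast accuracy might be checked on a holdout period before anything is scaled out. It assumes MAPE as the accuracy metric and a seasonal-naive forecast as the baseline to beat; the function names, the 15% target and the synthetic data are hypothetical choices for illustration, not a prescribed method.

```python
from statistics import mean

# Hypothetical discovery check: every name, metric and threshold here is an
# illustrative assumption, not a prescribed method.

def mape(actual, predicted):
    """Mean absolute percentage error in percent (assumes no zero actuals)."""
    return 100 * mean(abs((a - p) / a) for a, p in zip(actual, predicted))

def seasonal_naive(history, horizon, season=7):
    """Baseline: repeat the last observed season across the horizon."""
    last_season = history[-season:]
    return [last_season[i % season] for i in range(horizon)]

def moving_average(history, horizon, window=7):
    """Toy candidate model: a flat forecast at the recent average level."""
    level = mean(history[-window:])
    return [level] * horizon

def discovery_check(series, horizon, forecaster, target_mape, season=7):
    """Hold out the last `horizon` points, then compare the candidate model
    with the seasonal-naive baseline and with the business accuracy target."""
    train, test = series[:-horizon], series[-horizon:]
    model_error = mape(test, forecaster(train, horizon))
    baseline_error = mape(test, seasonal_naive(train, horizon, season))
    return {
        "model_mape": model_error,
        "baseline_mape": baseline_error,
        "beats_baseline": model_error < baseline_error,
        "meets_target": model_error <= target_mape,
    }

# Synthetic daily series with a perfect weekly pattern (4 weeks of data).
series = [100 + 10 * (day % 7) for day in range(28)]
result = discovery_check(series, horizon=7, forecaster=moving_average, target_mape=15)
print(result)
```

On this synthetic series the toy model meets the assumed 15% target (MAPE of roughly 13.7%) but loses to the seasonal-naive baseline, which is exact here. A result like that argues for another time-boxed round of discovery rather than for starting engineering work.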

There are frameworks, such as CRISP-DM², that formalize the transition between phases during the iterative development of AI products. It's helpful to adopt a process that facilitates the flow of discovery findings into the AI product to establish a healthy cadence of product advancement.

Conclusion

The right team and processes are instrumental to an organization's success when it comes to building AI products. Although most teams have to operate under resource constraints, it's still worthwhile to develop a talent strategy and a technology enablement strategy. These strategies ensure that the available resources are invested where they matter most.

We will address the final challenge to AI adoption (“avoiding common pitfalls”) in the upcoming Part III of this series. Read about “how to identify AI opportunities” in Part I.